I’ve been downloading and using more image maps for textures. A couple of sites let you choose which file format to download. Is there a real benefit to downloading the TIFF files, which are about 4x bigger than the JPEGs? I’m not talking about small JPEGs either: these are 8-16k resolution files, and the JPEG packs are usually in the hundreds of MB, whereas the TIFF packs are usually over a GB. That’s a lot to download if there isn’t any real need for the uncompressed TIFFs.

I’d be interested if someone chimes in who knows for sure about this. My speculation is that 99% of the time the uncompressed TIFFs are bigger than necessary, and I don’t think they’ll help much. I think greater bit depth is really most important for normal maps, displacement maps, and dynamic range if you’ll be pushing/pulling those values in the rendering app. Otherwise, I can’t imagine it’s going to be of any benefit.

But I’m also open to being corrected if need be.

That’s what I was thinking. I downloaded the same texture set as JPEG and TIFF and was going to play around to see what difference there is. I know that if I was doing GPU rendering, the JPEGs would definitely be advantageous due to memory constraints. With FriendlyShade, you can actually choose which file format you want for each map and download them as a zip, so if needed that might be the way to go for the normal and displacement maps, like you said.

TIFFs are usually not really uncompressed; they have lossless compression like ZIP or LZW. Basically a kind of compression that doesn’t reduce quality. At least, I can’t imagine the TIFFs are really uncompressed, since that would cost an enormous amount of data with the only benefit being that they load a bit quicker in programs like Photoshop.
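To make "lossless" concrete, here is a minimal sketch using Python's standard `zlib` module, which implements Deflate, the algorithm behind TIFF's ZIP option. It does not read or write an actual TIFF; the 64 x 64 ramp is a made-up stand-in for texture data.

```python
import zlib

# Hypothetical 64x64 single-channel 8-bit "texture": a simple repeating ramp.
width, height = 64, 64
pixels = bytes((x + y) % 256 for y in range(height) for x in range(width))

# Deflate is the algorithm behind TIFF's "ZIP" compression option.
compressed = zlib.compress(pixels, level=9)
restored = zlib.decompress(compressed)

print(len(pixels), "bytes raw ->", len(compressed), "bytes compressed")
print("bit-identical after round trip:", restored == pixels)
```

The file gets smaller, but every byte comes back exactly as it went in, which is the part JPEG cannot promise.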

JPEG compression is lossy, so you’ll lose quality. For a diffuse/colour texture that doesn’t really matter too much, as long as the JPEG quality is reasonably OK. But for normal maps, displacement maps and roughness maps it matters a lot. You don’t want JPEG artifacts ruining your roughness or affecting the displacement, since that would be visible.

Someone has to correct me if I’m wrong, but as far as I know a GPU can’t do much with either TIFFs or JPGs directly. All images offered to a GPU will be converted to DDS files (that’s the waiting time ahead of a GPU render). Those are mostly lossy compressed files, but they come in a lot of different ‘flavours’ when it comes to alpha channels, bit depth etc.

I originally worked in print and therefore I hate JPGs as starting material, because a JPG is already a reduced-quality image and will lose quality again if converted to DDS.

I won’t care that much if it’s only the colour/diffuse map that is a JPG, but the other texture maps especially need to be high quality, or else you will absolutely see the difference. This is particularly true for displacement and normal maps, which is also the reason they are mostly in 16-bit instead of 8-bit. That’s another reason why you don’t want JPGs: in practice they are always 8-bit.

If you don’t need the higher bit depth that TIFF can offer, a high-quality JPEG is the sensible choice.

Like Will mentions, 16-bit or float (32-bit) images do have advantages when used for normal maps and displacement maps. So for those use cases, TIFF, but perhaps preferably EXR, is the better choice. For diffuse textures, the higher bit depths have very limited returns.

In terms of memory usage, and this applies to both CPU and GPU rendering, the image format at a given bit depth doesn’t matter. KeyShot always stores all textures uncompressed in memory. The bit depth does matter for memory usage though.
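A rough sketch of the arithmetic behind that, assuming a plain RGB texture with no padding or extra alpha channel (how KeyShot actually lays out texels internally is not something this thread states, so treat the numbers as ballpark figures). The function name is made up for illustration.

```python
def texture_memory_bytes(width, height, channels, bits_per_channel):
    """Uncompressed size of one texture in memory, in bytes."""
    return width * height * channels * (bits_per_channel // 8)

MIB = 1024 * 1024
for bits in (8, 16, 32):
    size = texture_memory_bytes(8192, 8192, 3, bits)
    print(f"8192 x 8192 RGB at {bits}-bit: {size / MIB:.0f} MiB")
```

Note that the file format never appears in the calculation: an 8k JPEG and an 8k 8-bit TIFF end up the same size once decoded, while going from 8-bit to 16-bit doubles it.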

One scenario where using TIFF would make a lot of sense is mipmapping. A tiled, multi-resolution (pyramidal) TIFF can store several resolutions of the same texture in a single file, with each resolution split into tiles. This allows textures to be loaded at a smaller resolution for objects that are farther away from the camera, which helps avoid issues like moiré patterns that can occur with very fine textures.

KeyShot doesn’t currently support mipmapping though…

I hope this helps!

Dries

Oscar outlined nicely where JPEG images shouldn’t be used and why.

EDIT:

KeyShot has some support for loading DDS textures, but the maps are still stored uncompressed in memory for the renderer (CPU and GPU).

Ah @dries.vervoort that’s interesting. Could that be the reason KeyShot runs out of GPU memory quite soon compared to other GPU renderers? I did some testing in this topic: https://community.keyshot.com/forum/t/link-properties-of-items-in-model-sets/61/3?u=oscar.rottink

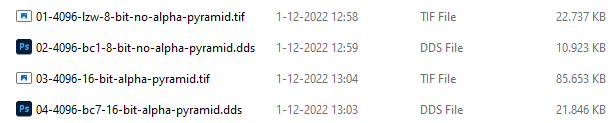

It would make sense, since TIFFs are so much bigger than a DDS in the right format, especially with 16-bit and alpha, as you can see in this image (where I did use LZW compression for the TIFF).

I would be interested in how KeyShot handles textures for GPU renders, because I might be able to use certain file formats so I don’t run into GPU memory limits so soon (and unexpectedly).

It might be a bit technical for a lot of users, but I think it would help to know in what formats the different texture layers get offered to the GPU. Especially with materials that use a lot of different image layers, it’s quite easy to have one material consume an enormous amount of GPU memory.

- The ‘pyramid’ for the DDS is mipmapping set to auto; I’d guess around 12 sizes with a 4096 base resolution.
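As a quick check on that guess, here is a small sketch that counts the levels of a full mip chain for a square 4096 texture, assuming each level halves the edge length until it reaches 1 pixel. A DDS exporter on "auto" may stop earlier, so this is an upper bound rather than a statement about what the tool actually wrote.

```python
def mip_chain(base):
    """Edge lengths of a square mip chain, halving down to 1 pixel."""
    sizes = [base]
    while sizes[-1] > 1:
        sizes.append(max(1, sizes[-1] // 2))
    return sizes

sizes = mip_chain(4096)
texels = sum(s * s for s in sizes)
print(len(sizes), "levels:", sizes)
print(f"total texels vs. base level alone: {texels / (4096 * 4096):.3f}x")
```

A full chain adds roughly a third on top of the base level, so the pyramid itself is not what makes a file large.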

Thanks everyone, good stuff. Anyone have a good resource to show how/why higher bit depths are better for certain textures? My very simplistic view of it is that the higher bit depths have more color “steps” than a monitor can display, so that’s why you can’t see it, but when you use that extra invisible data to affect other more visible things, that’s when it counts (for some reason).

Yes, exactly that, actually.

Part of the explanation is also that, unless the texture is used for the diffuse channel, the “color” information of the texture is not really color, but linear data.

This applies when the texture is used for any channel that isn’t diffuse: specular, roughness, normal, opacity, metalness, displace etc.

So higher bit depth images do indeed provide more “steps”, more granular information, that the shaders can work with. You can very easily see this for displacement, but it affects all data channels.

An 8-bit image provides only 256 steps for roughness, metalness, displacement etc.

So imagine you create a seemingly smooth gradient from black to white in an image editor in 8-bit (well, the gradient will show banding on most monitors…), and use it to displace a 1 x 1 m plane with a displacement height of 1 m. What you get is basically a stairway with 256 steps instead of a smooth slope.

Now make the same gradient texture in 32-bit, and you will get a smooth slope once displaced.
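The stairway can be put into numbers with a short sketch. It quantizes an ideal 0..1 ramp to an integer bit depth and counts how many distinct heights survive, assuming the 1 m displacement height from the example above. It compares 8-bit with 16-bit integer rather than 32-bit float, purely to keep the code simple; `quantize` is a made-up helper, not anything from KeyShot.

```python
def quantize(value, bits):
    """Round a 0..1 value to the nearest level an integer image of this bit depth can store."""
    levels = (1 << bits) - 1
    return round(value * levels) / levels

samples = 100_000
ramp = [i / (samples - 1) for i in range(samples)]

for bits in (8, 16):
    heights = {quantize(v, bits) for v in ramp}
    step_mm = 1000 / ((1 << bits) - 1)  # 1 m displacement height, in millimetres
    print(f"{bits}-bit: {len(heights)} distinct heights, about {step_mm:.3f} mm per step")
```

At 8-bit each stair is close to 4 mm tall on a 1 m slope, which is easily visible in a close-up render; at 16-bit the steps shrink to a small fraction of a millimetre.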

Since non-color textures are linear data, it is also important to note that those textures should be interpreted as having a gamma of 1. This means setting the Contrast for the texture to 0!
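To illustrate why the gamma matters, here is a sketch that applies the standard sRGB decoding curve to a data value. This is the general sRGB formula, used here as an assumption about what a colour-managed pipeline would do to a texture it believes is colour; it is not a description of how KeyShot's Contrast setting is implemented.

```python
def srgb_to_linear(v):
    """Standard sRGB decoding curve for a value in 0..1."""
    if v <= 0.04045:
        return v / 12.92
    return ((v + 0.055) / 1.055) ** 2.4

stored = 0.5  # e.g. a roughness value the texture author intended as 0.5
print("read as linear data (gamma 1):", stored)
print("wrongly decoded as sRGB colour:", round(srgb_to_linear(stored), 3))
```

A mid-grey roughness of 0.5 would drop to roughly 0.21 if it were treated as colour, so the surface would render much glossier than the texture author intended.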